Cheers and Jeers: January 4, 2024

The Education Gadfly 1.4.2024

National, Flypaper

What we're reading this week: January 4, 2024

The Education Gadfly 1.4.2024

National, Flypaper

Disappointment and hope: K–12’s biggest stories from 2023

Dale Chu 12.21.2023

National, Flypaper

The best and worst of education reform in 2023

Michael J. Petrilli 12.21.2023

National, Flypaper

Fordham’s top 10 stories of 2023

Brandon L. Wright 12.21.2023

National, Flypaper

15 of the best opinion pieces on education reform that we read in 2023

Michael J. Petrilli 12.21.2023

National, Flypaper

Fordham’s top 5 podcasts of 2023

Daniel Buck 12.21.2023

National, Flypaper

Cheers and Jeers: December 21, 2023

The Education Gadfly 12.21.2023

National, Flypaper

What we're reading this week: December 21, 2023

The Education Gadfly 12.21.2023

National, Flypaper

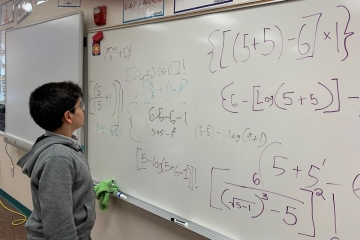

How an early college program in Arizona’s poorest city changes lives: An interview with Homero Chavez

Brandon L. Wright 12.18.2023

National, Flypaper

3 lessons in transformational leadership

Kathleen Porter-Magee 12.15.2023

National, Flypaper